NVIDIA Reveals HGX H200: AI Accelerator Based On Hopper Architecture With HBM3e Memory

NVIDIA has announced the HGX H200, a new AI computing platform built on the NVIDIA Hopper architecture and powered by the H200 Tensor Core GPU.

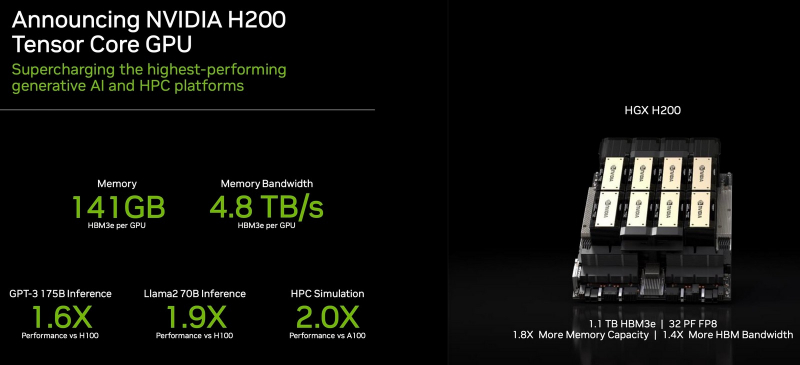

The NVIDIA H200 is the first GPU to feature HBM3e memory, a faster version of HBM3. It carries 141 GB of HBM3e with 4.8 TB/s of bandwidth, which NVIDIA says is nearly double the capacity and 2.4 times the bandwidth of the previous-generation NVIDIA A100 accelerator. For comparison, the H100 has 80 GB of HBM3 at 3.35 TB/s, while AMD’s upcoming Instinct MI300X will feature 192 GB of HBM3 at 5.2 TB/s.
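To put these figures side by side, here is a small back-of-the-envelope script that computes the H200's capacity and bandwidth ratios against the other accelerators mentioned above. The H200, H100 and MI300X numbers are the ones quoted in this article; the A100 entry is an assumption (the 80 GB A100 variant at roughly 2.0 TB/s), since the article does not give its specs. The helper name `ratios_vs` is purely illustrative.

```python
# Memory specs: (capacity in GB, bandwidth in TB/s).
# H200, H100 and MI300X values are as quoted in the article.
# ASSUMPTION: the A100 entry is the 80 GB variant at ~2.0 TB/s.
SPECS = {
    "H200": (141, 4.8),
    "H100": (80, 3.35),
    "A100": (80, 2.0),
    "MI300X": (192, 5.2),
}


def ratios_vs(reference: str, baseline: str) -> tuple[float, float]:
    """Return (capacity ratio, bandwidth ratio) of `reference` over `baseline`."""
    ref_cap, ref_bw = SPECS[reference]
    base_cap, base_bw = SPECS[baseline]
    return ref_cap / base_cap, ref_bw / base_bw


if __name__ == "__main__":
    # Compare the H200 against every other accelerator in the table.
    for other in SPECS:
        if other == "H200":
            continue
        cap, bw = ratios_vs("H200", other)
        print(f"H200 vs {other}: {cap:.2f}x capacity, {bw:.2f}x bandwidth")
```

Under these assumptions the script reports about 1.76x capacity and 2.40x bandwidth over the A100 (the "nearly double" and "2.4 times" figures), about 1.43x bandwidth over the H100, and shows that the MI300X, on paper, still leads the H200 in both capacity and bandwidth.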

Thanks to the memory upgrade, the H200 should deliver a significant performance boost when running already-trained AI models (inference). For example, NVIDIA claims the H200 runs the 70-billion-parameter Llama 2 large language model 1.9 times faster than the H100, and a trained 175-billion-parameter GPT-3 model 1.6 times faster.

H200 accelerators can be deployed in every type of data center: on-premises, cloud, hybrid-cloud and edge. NVIDIA’s global ecosystem of partner server makers, including ASRock Rack, ASUS, Dell Technologies, Eviden, GIGABYTE, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron and Wiwynn, can upgrade their existing systems with the H200. Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to deploy H200-based instances starting next year.