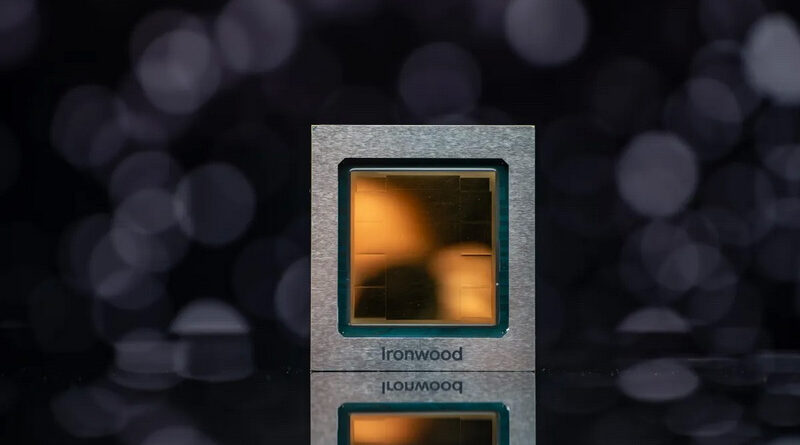

Google Unveils Ironwood TPU for Large-Scale Inference with Record Performance

At its Cloud Next ‘25 conference in Las Vegas, Google announced Ironwood, its seventh-generation Tensor Processing Unit (TPU) and the first in the TPU line designed specifically for inference, the stage in which an already-trained neural network is put to work on practical tasks. Training fits a model's parameters to huge amounts of data. Inference comes afterward, when the finished model answers requests: generating text, processing images, or making predictions.

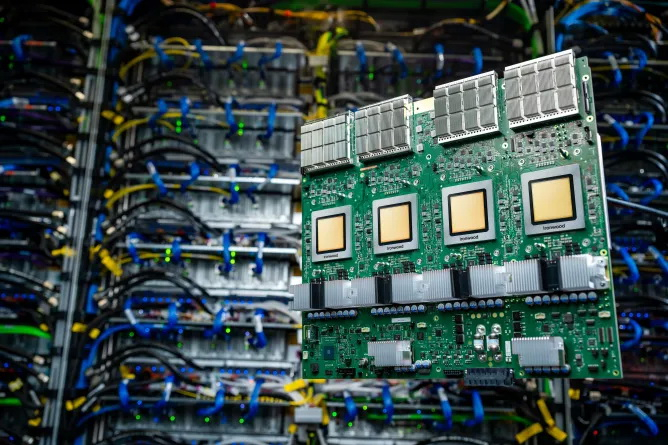

Google says each Ironwood chip delivers peak compute of 4,614 teraflops, or 4,614 trillion floating-point operations per second. A cluster of 9,216 chips would therefore offer about 42.5 exaflops.
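The cluster figure is just the per-chip peak multiplied by the chip count. The short sketch below checks that arithmetic. It assumes perfect linear scaling across chips, so the result is an upper bound rather than a throughput any real workload would reach. The constant and function names are illustrative only.

```python
# Per-chip peak and chip count as quoted in the article.
PEAK_TFLOPS_PER_CHIP = 4_614   # 1 teraflop/s = 10**12 floating-point ops per second
CHIPS_PER_CLUSTER = 9_216

def cluster_peak_exaflops(tflops_per_chip: float, chips: int) -> float:
    """Aggregate peak of a cluster, assuming ideal linear scaling (an upper bound)."""
    total_tflops = tflops_per_chip * chips   # 42,522,624 teraflops
    return total_tflops / 1_000_000           # 1 exaflop = 10**6 teraflops

print(f"{cluster_peak_exaflops(PEAK_TFLOPS_PER_CHIP, CHIPS_PER_CLUSTER):.1f} exaflops")
```

Running it prints `42.5 exaflops`, matching Google's figure.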

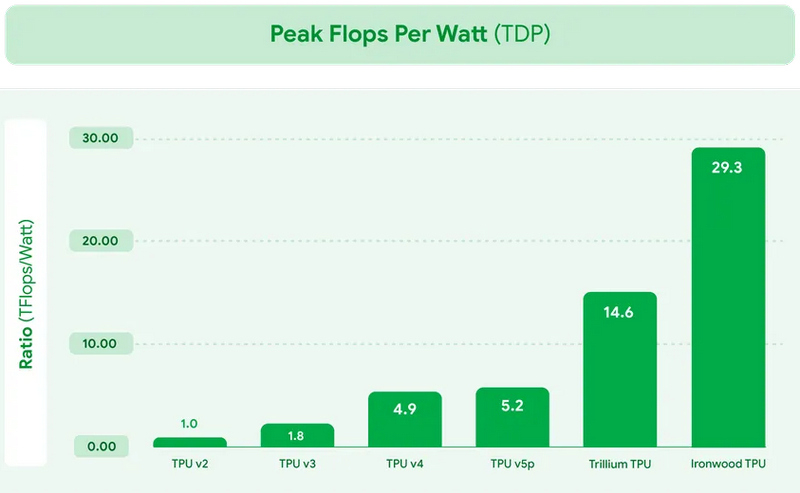

Each chip carries 192 GB of dedicated high-bandwidth memory (HBM) with 7.4 TB/s of memory bandwidth. It also includes an advanced SparseCore, a specialized core for the kinds of data common in “advanced ranking” and “recommender systems” workloads (for example, an algorithm that suggests clothes you might like). Google says the TPU architecture is optimized to minimize data movement and latency, which the company claims yields significant power savings.
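The per-chip numbers show why data movement matters. The sketch below borrows the standard roofline model, which is not Google's own analysis, and treats the quoted 7.4 bandwidth figure as terabytes per second. It assumes both peak compute and peak bandwidth are fully attainable. Three quantities come out of it. The ridge point is how many operations a kernel must perform per byte read from memory before compute, rather than bandwidth, becomes the limit. The sweep time is a lower bound on reading every byte of HBM once, which roughly resembles what a memory-bound inference step does when it streams a model's weights. The last value is total HBM across a 9,216-chip cluster. All names are illustrative.

```python
# Per-chip figures quoted in the article (assumed to be peak, attainable values).
PEAK_FLOPS = 4_614e12     # 4,614 teraflops, in floating-point ops per second
HBM_BYTES = 192e9         # 192 GB of HBM
HBM_BANDWIDTH = 7.4e12    # 7.4 TB/s, read as terabytes per second
CHIPS_PER_CLUSTER = 9_216

def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """Roofline ridge point: ops per byte needed before compute becomes the bottleneck."""
    return peak_flops / bandwidth

def full_memory_sweep_seconds(capacity: float, bandwidth: float) -> float:
    """Lower bound on the time to read every byte of memory once."""
    return capacity / bandwidth

# Total HBM across the cluster, in petabytes (1 PB = 10**15 bytes).
cluster_hbm_pb = HBM_BYTES * CHIPS_PER_CLUSTER / 1e15

print(f"ridge point: {ridge_point(PEAK_FLOPS, HBM_BANDWIDTH):.0f} FLOP/byte")
print(f"full HBM sweep: {full_memory_sweep_seconds(HBM_BYTES, HBM_BANDWIDTH) * 1e3:.1f} ms")
print(f"cluster HBM: {cluster_hbm_pb:.2f} PB")
```

The result is roughly 624 operations per byte, about 25.9 ms per full memory sweep, and about 1.77 PB of HBM per cluster. A kernel that does fewer operations per byte than the ridge point spends most of its time waiting on memory. That is why cutting data movement translates into both speed and energy savings.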

“We built Ironwood for an era where it’s not just the data a model needs, but also its ability to act once trained,” said Google vice president Amin Vahdat. “The demand for training and serving AI models has grown 100 million-fold over the last eight years, and our investment in TPU is helping set new standards.”